VR Shared - Meta Avatars integration

Overview

This sample explains how to integrate Meta avatars in a Fusion application using the Shared Mode topology.

It focuses on:

- synchronizing avatars using Fusion networked variables

- integrating lip sync with Fusion Voice

Technical Info

This sample uses the Shared Mode topology.

The project has been developed with:

- Unity 2021.3.23f1

- Oculus XR Plugin 3.3.0

- Meta Avatars SDK 18.0

- Photon Fusion 1.1.3f 599

- Photon Voice 2.50

Before you start

To run the sample:

- Create a Fusion AppId in the PhotonEngine Dashboard and paste it into the App Id Fusion field in Real Time Settings (reachable from the Fusion menu).

- Create a Voice AppId in the PhotonEngine Dashboard and paste it into the App Id Voice field in Real Time Settings.

- Load the Demo scene in Scenes\OculusDemo\ and press Play.

Download

| Version | Release Date | Download |
|---|---|---|
| 1.1.3 | May 05, 2023 | Fusion VR Shared Meta Avatar Integration 1.1.3 Build 191 |

Handling Input

Meta Quest

- Teleport: press A, B, X, Y, or any stick to display a pointer. You will teleport to any accepted target on release.

- Grab: first put your hand over the object, then grab it using the controller grab button.

The sample supports Meta hand tracking, so you can also use the pinch gesture to teleport or grab an object.

Folder Structure

The /Oculus folder contains the Meta SDK.

The /Photon folder contains the Fusion and Photon Voice SDK.

The /Photon/FusionXRShared folder contains the rig and grabbing logic coming from the VR shared sample, creating a FusionXRShared light SDK that can be shared with other projects.

The /Photon/FusionXRShared/Extensions folder contains extensions for FusionXRShared, for reusable features like synchronized rays, locomotion validation, ...

The /Photon/FusionXRShared/Extensions/OculusIntegration folder contains prefabs and scripts developed for the Meta SDK integration.

The /StreamingAssets folder contains pre-built Meta avatars.

The /XR folder contains configuration files for virtual reality.

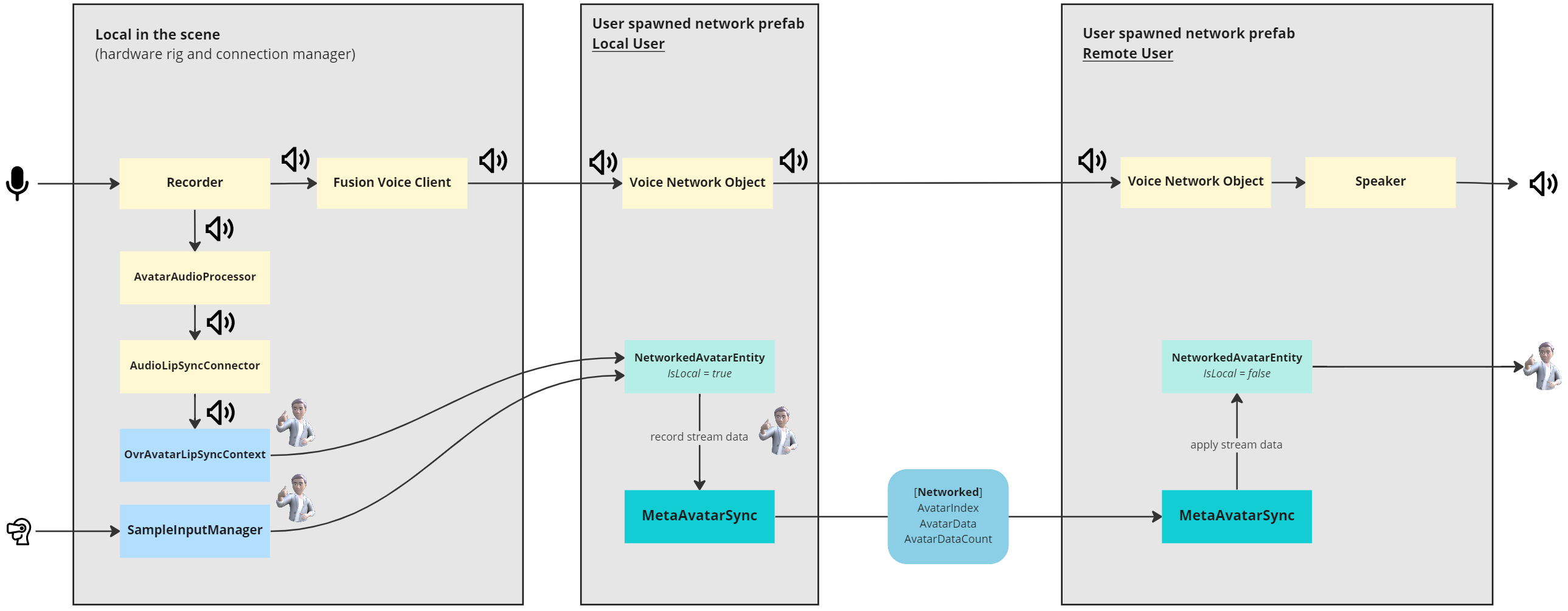

Architecture overview

To understand how the rig is set up, or for any other details regarding this sample, please refer to the main VR Shared page first.

Scene setup

OVR Hardware Rig

The scene contains a hardware rig compatible with the Oculus SDK. Its MetaAvatar child object includes everything related to the Meta avatar:

- The LipSync object has the OVRAvatarLipSyncContext component: it is provided by the Oculus SDK to set up the lip sync feature.

- The BodyTracking object has the SampleInputManager component: it comes from the Oculus SDK (Asset/Avatar2/Example/Common/Scripts). It derives from the OvrAvatarInputManager base class and refers to the OVR Hardware Rig to set tracking input on an avatar entity.

- The AvatarManager object has the OVRAvatarManager component: it is used to load Meta avatars.

ConnectionManager

The ConnectionManager handles the connection to the Photon Fusion server and spawns the user network prefab when the OnPlayerJoined callback is called.
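In Shared Mode, every client is notified when any player joins, so the usual pattern (assumed here, and commonly written in Fusion as a comparison with runner.LocalPlayer) is that each client spawns the user prefab only for its own local player. The sketch below is a plain C# model of that rule with hypothetical names and no Fusion types; the spawn call is replaced by recording the player id.

```csharp
using System.Collections.Generic;

// Plain C# model (hypothetical names, no Fusion types) of the Shared Mode
// spawning rule: every client is notified of every joining player, but each
// client only spawns the user prefab for its own local player.
public sealed class SharedModeSpawnModel
{
    private readonly int _localPlayerId;

    // Records which players this client spawned a user prefab for.
    public List<int> SpawnedFor { get; } = new List<int>();

    public SharedModeSpawnModel(int localPlayerId) => _localPlayerId = localPlayerId;

    // Plays the role of the OnPlayerJoined callback.
    public void OnPlayerJoined(int playerId)
    {
        // Remote players spawn their own rig; it reaches this client through replication.
        if (playerId != _localPlayerId) return;
        SpawnedFor.Add(playerId); // stands in for the network spawn call
    }
}
```

With three players joining, a client whose local player id is 2 spawns exactly one rig, its own; the two other rigs appear on this client because their owners spawned them.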

To stream voice over the network, the Fusion Voice Client is required. Its Primary Recorder field refers to the Recorder game object located under the ConnectionManager.

For more details on Photon Voice integration with Fusion, see this page: Voice - Fusion Integration.

The ConnectionManager game object is also in charge of requesting microphone authorization through the MicrophoneAuthorization component. It enables the Recorder object once microphone access is granted.

The Recorder child object is in charge of connecting to the microphone. It contains the AudioLipSyncConnector component, which receives the audio stream from the Recorder and forwards it to the OVRAvatarLipSyncContext.

The AudioSource and UpdateConnectionStatus components are optional: they give audio feedback on INetworkRunnerCallbacks events.

User Spawned Network Prefab

The ConnectionManager spawns the user network prefab OculusAvatarNetworkRig Variant when the OnPlayerJoined callback is called.

This prefab contains:

- MetaAvatarSync: it is in charge of selecting a random avatar at start and streaming the avatar over the network.

- NetworkedAvatarEntity: it derives from the Oculus OvrAvatarEntity class. It is used to configure the avatar entity depending on whether the network rig represents the local user or a remote user.

Avatar Synchronization

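When the user network prefab is spawned, MetaAvatarSync selects a random avatar index. With integer arguments, UnityEngine.Random.Range excludes the maximum, so the index is between 0 and 30.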

```csharp
// Integer overload: returns a value from 0 to 30 inclusive
AvatarIndex = UnityEngine.Random.Range(0, 31);
Debug.Log($"Loading avatar index {AvatarIndex}");
avatarEntity.SetAvatarIndex(AvatarIndex);
```

Because AvatarIndex is a networked variable, its value is replicated to all clients, and OnAvatarIndexChanged is triggered when a change is detected.

```csharp
// Replicated to all clients; -1 means no avatar has been selected yet
[Networked(OnChanged = nameof(OnAvatarIndexChanged))]
int AvatarIndex { get; set; } = -1;
```

```csharp
static void OnAvatarIndexChanged(Changed<MetaAvatarSync> changed)
{
    changed.Behaviour.ChangeAvatarIndex();
}
```
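The contract behind [Networked(OnChanged = ...)] can be illustrated with a plain C# property that invokes a callback only when its value actually changes. This is a hypothetical model, not Fusion's implementation: it runs in a single process and does not reproduce Fusion's replication or tick-based change detection.

```csharp
using System;

// Plain C# model (not Fusion's implementation) of a property that invokes a
// change callback, similar in spirit to [Networked(OnChanged = ...)].
public sealed class ChangeNotifyingInt
{
    private int _value;
    private readonly Action<int, int> _onChanged; // (previous, current)

    public ChangeNotifyingInt(int initial, Action<int, int> onChanged)
    {
        _value = initial;
        _onChanged = onChanged;
    }

    public int Value
    {
        get => _value;
        set
        {
            if (value == _value) return; // same value: no callback
            int previous = _value;
            _value = value;
            _onChanged(previous, value);
        }
    }
}
```

Starting from -1, as AvatarIndex does, assigning 7 triggers the callback once; assigning 7 again triggers nothing, which is why the sentinel default matters: the first real selection is always seen as a change.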

The SampleInputManager component on the hardware rig tracks the user's movements.

It is referenced by the NetworkedAvatarEntity if the player network rig represents the local user.

This reference is set by MetaAvatarSync in its ConfigureAsLocalAvatar() method.

At each LateUpdate(), MetaAvatarSync captures avatar data for the local player.

```csharp
private void LateUpdate()
{
    // Local avatar has fully updated this frame and can send data to the network
    if (Object.HasInputAuthority)
    {
        CaptureAvatarData();
    }
}
```

The CaptureLODAvatar method gets the avatar entity stream buffer and copies it into a networked variable called AvatarData.

Its capacity is limited to 1200 bytes, which is enough to stream Meta avatars in medium or high LOD.

Please note that, for simplicity, only the medium LOD is streamed in this sample.

The buffer size, AvatarDataCount, is also synchronized over the network.

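```csharp
// Fixed-capacity buffer holding the latest avatar stream data
[Networked(OnChanged = nameof(OnAvatarDataChanged)), Capacity(1200)]
public NetworkArray<byte> AvatarData { get; }

// Number of meaningful bytes in AvatarData
[Networked]
public uint AvatarDataCount { get; set; }
```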
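The capture side pairs a fixed-capacity array with an explicit byte count because stream frames vary in size. The sketch below is a plain C# model of that pairing, with a hypothetical class name and no Fusion or Meta SDK types; it reuses the 1200-byte capacity from the sample.

```csharp
using System;

// Plain C# model of copying a variable-size avatar stream buffer into a
// fixed-capacity array plus an explicit byte count, mirroring the
// AvatarData (Capacity 1200) / AvatarDataCount pair.
public sealed class FixedCapacityAvatarBuffer
{
    public const int Capacity = 1200; // same capacity as in the sample

    public byte[] Data { get; } = new byte[Capacity];
    public uint Count { get; private set; }

    // Returns false and keeps the previous frame if the new one does not fit.
    public bool TryCapture(ReadOnlySpan<byte> frame)
    {
        if (frame.Length > Capacity) return false;
        frame.CopyTo(Data);
        Count = (uint)frame.Length;
        return true;
    }
}
```

This sketch rejects oversized frames instead of truncating them, on the assumption that a partial stream packet would not be decodable on the receiving side. Bytes beyond Count may still hold an older, longer frame, which is why the count has to travel with the data.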

When the avatar stream buffer changes, remote clients are notified through OnAvatarDataChanged and apply the received data to the network rig representing that player.

```csharp
static void OnAvatarDataChanged(Changed<MetaAvatarSync> changed)
{
    changed.Behaviour.ApplyAvatarData();
}
```
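On the receiving side, only the first AvatarDataCount bytes of AvatarData form the current frame. The following hypothetical helper, in plain C# without Fusion or Meta SDK types, shows that slicing step together with a guard that skips the local rig, which is driven by tracking input rather than by the network.

```csharp
using System;

// Plain C# model of the receiving side: only the first `count` bytes of the
// fixed-capacity array are meaningful; the rest may hold older data.
public static class AvatarDataReader
{
    // Hypothetical helper: returns the bytes a remote client would hand to the
    // avatar entity, or an empty array for the local rig.
    public static byte[] ExtractFrame(byte[] data, uint count, bool isLocalRig)
    {
        // The local rig is animated from tracking input, not from the network.
        if (isLocalRig) return Array.Empty<byte>();
        if (count > (uint)data.Length)
            throw new ArgumentOutOfRangeException(nameof(count));

        var frame = new byte[count];
        Array.Copy(data, frame, (int)count);
        return frame;
    }
}
```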

Loading personalized Meta avatar

Loading the local user avatar

To load the local user's avatar, during the Spawned callback and if the network object belongs to the local user, NetworkedAvatarEntity requests the avatar of the user's account with LoadLoggedInUserCdnAvatar().

```csharp
using System.Threading.Tasks; // for Task.Delay

/// <summary>
/// Load the user meta avatar, and store its user id in the associated listener
/// Note: _deferLoading has to be set to true for this to work
/// </summary>
async void LoadUserAvatar(NetworkedAvatarEntity.IUserIdListener listener)
{
    Debug.Log("Loading Cdn avatar...");
    LoadLoggedInUserCdnAvatar();

    // Poll until the avatar entity reports the logged-in user's id
    while (_userId == 0)
    {
        await Task.Delay(100);
    }

    Debug.Log("Avatar UserId: " + _userId);
    if (listener != null)
        listener.UserId = _userId;
}
```
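The polling loop above waits indefinitely if the user id never becomes non-zero, for example if the avatar request fails. A variant with a timeout is sketched below in plain C#; getUserId is a hypothetical accessor standing in for reading _userId, and the timeout and poll interval values are assumptions to tune.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Polling with a timeout: returns the user id, or null if it did not arrive in time.
public static class UserIdWaiter
{
    public static async Task<ulong?> WaitForUserIdAsync(
        Func<ulong> getUserId, TimeSpan timeout, TimeSpan pollInterval,
        CancellationToken cancellationToken = default)
    {
        var deadline = DateTime.UtcNow + timeout;
        while (true)
        {
            ulong id = getUserId();
            if (id != 0) return id;                       // 0 means "not loaded yet", as in the sample
            if (DateTime.UtcNow >= deadline) return null; // give up instead of waiting forever
            // Throws TaskCanceledException if the token is cancelled (e.g. object despawned)
            await Task.Delay(pollInterval, cancellationToken);
        }
    }
}
```

A caller would only assign listener.UserId when the result has a value, and could log or fall back to a default avatar otherwise.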

This method sets UserId, a [Networked] property, so that the user id is synchronized on all clients.

```csharp
[Networked(OnChanged = nameof(OnUserIdChanged))]
public ulong UserId { get; set; }
```

Please note that to be able to load the user avatar:

- Defer Loading should be set to true on the NetworkedAvatarEntity component of the player's prefab: it prevents the avatar from being loaded automatically at start.

- syncUserIdAvatar has to be set to true on the NetworkedAvatarEntity component for LoadUserAvatar() to be called (if both syncUserIdAvatar and Defer Loading are set to false, a random local avatar is loaded automatically).

Loading the remote users' avatar

When UserId is received, remote clients download the avatar associated with this id:

```csharp
static void OnUserIdChanged(Changed<MetaAvatarSync> changed)
{
    changed.Behaviour.OnUserIdChanged();
}

void OnUserIdChanged()
{
    // Only remote copies download the avatar; the local user already loaded it
    if (Object.HasStateAuthority == false)
    {
        Debug.Log("Loading remote avatar: " + UserId);
        avatarEntity.LoadRemoteUserCdnAvatar(UserId);
    }
}
```

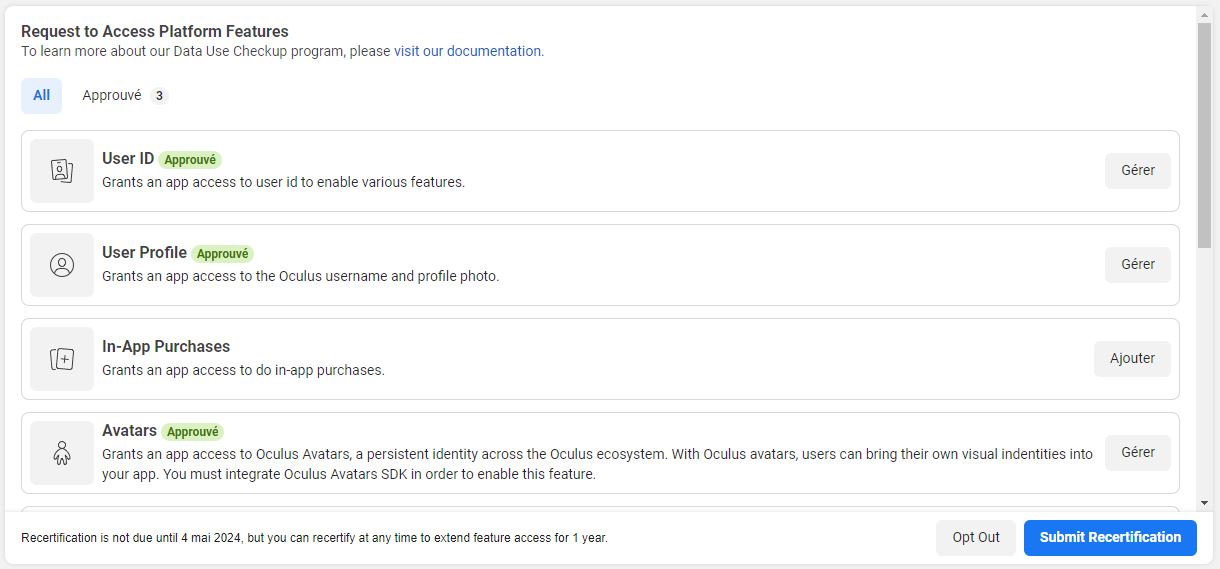

Access to Meta avatar

To be able to load Meta avatars, you have to add your application's App Id in the Unity Oculus > Oculus Platform settings menu.

This App Id can be found in the API > App Id field on your Meta dashboard.

Also, the Data Use Checkup section of this application must be completed, requesting access to User ID, User Profile and Avatars.

Testing users' avatars

To see, during development, the avatar associated with the local Meta account, the local user account must be a member of the organization associated with the App Id provided in the Oculus Platform settings.

To see your avatars in a cross-platform setup (between a Quest and a desktop build), your Quest and Rift applications need to be grouped, as described in the "Group App IDs Together" chapter of this page: Configuring Apps for Meta Avatars SDK.

LipSync

The microphone initialization is done by the Photon Voice Recorder.

The OvrAvatarLipSyncContext on the OVRHardwareRig is configured to expect direct calls to feed it with the audio buffer.

A class hooks into the Recorder's audio reads to forward the audio buffers to the OvrAvatarLipSyncContext, as detailed below.

The Recorder class can forward the read audio buffers to classes implementing the IProcessor interface.

For more details on how to create a custom audio processor see this page: Photon Voice - FAQ.

To register such a processor in the voice connection, a VoiceComponent subclass, AudioLipSyncConnector, is added to the same game object as the Recorder.

This component therefore receives the PhotonVoiceCreated and PhotonVoiceRemoved callbacks, which allow it to add a post-processor to the connected voice.

The connected post-processor is an AvatarAudioProcessor, implementing IProcessor<float>.

When a player connects, the MetaAvatarSync component searches for the AudioLipSyncConnector located on the Recorder to set the lipSyncContext field of this processor.

As a result, each time the Recorder calls the AvatarAudioProcessor Process callback, ProcessAudioSamples is called on the OvrAvatarLipSyncContext with the received audio buffer, so that lip synchronization is computed on the avatar model.

This way, the lip sync data is streamed along with the other avatar body data when it is captured with RecordStreamData_AutoBuffer on the avatar entity, during the LateUpdate of MetaAvatarSync.
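The forwarding processor described above can be modeled as a pass-through: it hands each buffer to the lip sync context and returns it unchanged, so the transmitted voice is not altered. The sketch below is a plain C# model under stated assumptions: IFloatProcessor is a stand-in modeled on Photon Voice's IProcessor<float> (a Process method that receives a buffer and returns the buffer to pass on), and the LipSyncForward delegate is a hypothetical stand-in for calling ProcessAudioSamples on OvrAvatarLipSyncContext.

```csharp
using System;

// Stand-in modeled on Photon Voice's IProcessor<float>; not the real interface.
public interface IFloatProcessor
{
    float[] Process(float[] buffer);
}

public sealed class LipSyncForwardingProcessor : IFloatProcessor
{
    // Hypothetical stand-in for the lipSyncContext field. It may be assigned
    // after the processor is registered (in the sample, by MetaAvatarSync).
    public Action<float[]> LipSyncForward { get; set; }

    public float[] Process(float[] buffer)
    {
        // Forward only once a lip sync target has been assigned.
        LipSyncForward?.Invoke(buffer);
        // Return the buffer unchanged so the voice stream is not modified.
        return buffer;
    }
}
```

Tolerating a missing target matters here because the processor is attached when the voice is created, while the lip sync context is only assigned later, when a player connects.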

Summary

To summarize, when the user network prefab is spawned for the local user, the MetaAvatarSync ConfigureAsLocalAvatar() method makes the associated NetworkedAvatarEntity receive data from:

- OvrAvatarLipSyncContext for lip sync

- SampleInputManager for body tracking

This data is streamed over the network through networked variables.

When a user network prefab is spawned for a remote user, MetaAvatarSync calls ConfigureAsRemoteAvatar(), and the associated NetworkedAvatarEntity builds and animates the avatar from the streamed data.
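The local/remote split can be condensed into a small decision model. The sketch below is plain C# with hypothetical names; in the sample, the equivalent configuration is performed by ConfigureAsLocalAvatar() and ConfigureAsRemoteAvatar() on MetaAvatarSync, whose actual code is not reproduced here.

```csharp
// Plain C# model of which data flows each rig uses (hypothetical names).
public enum AvatarRole { Local, Remote }

public sealed class AvatarRigConfig
{
    public AvatarRole Role { get; }

    // Local rig: fed by SampleInputManager and the lip sync context.
    public bool UsesTrackingInput => Role == AvatarRole.Local;
    // Local rig: writes AvatarData every LateUpdate.
    public bool CapturesStream => Role == AvatarRole.Local;
    // Remote rig: reads AvatarData when it changes.
    public bool AppliesStream => Role == AvatarRole.Remote;

    private AvatarRigConfig(AvatarRole role) => Role = role;

    public static AvatarRigConfig For(bool isLocalUser) =>
        new AvatarRigConfig(isLocalUser ? AvatarRole.Local : AvatarRole.Remote);
}
```

Each rig is either a producer or a consumer of the stream, never both, which keeps a single source of truth per avatar.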